Rethink Code Reviews

Jun 22, 2025

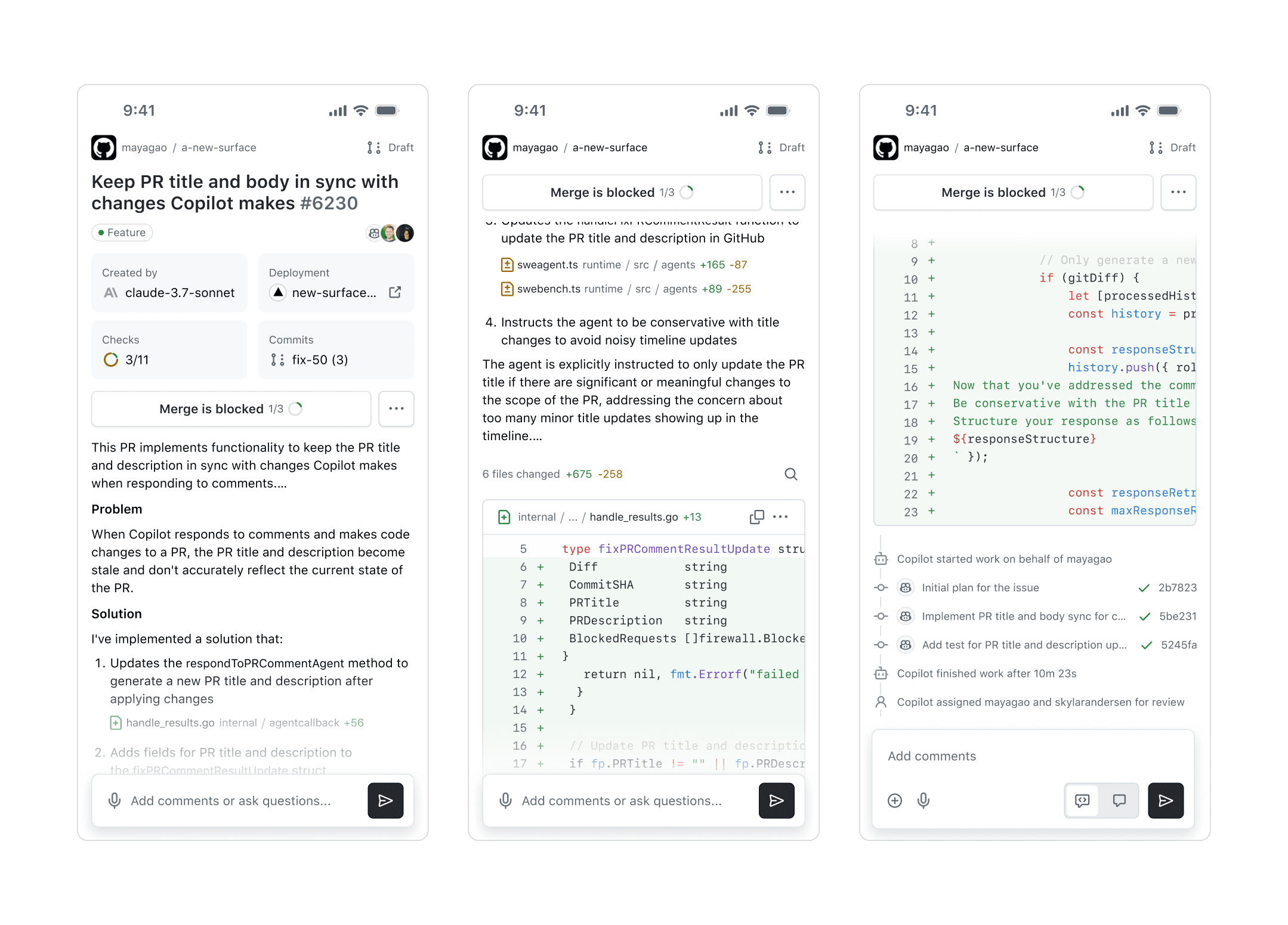

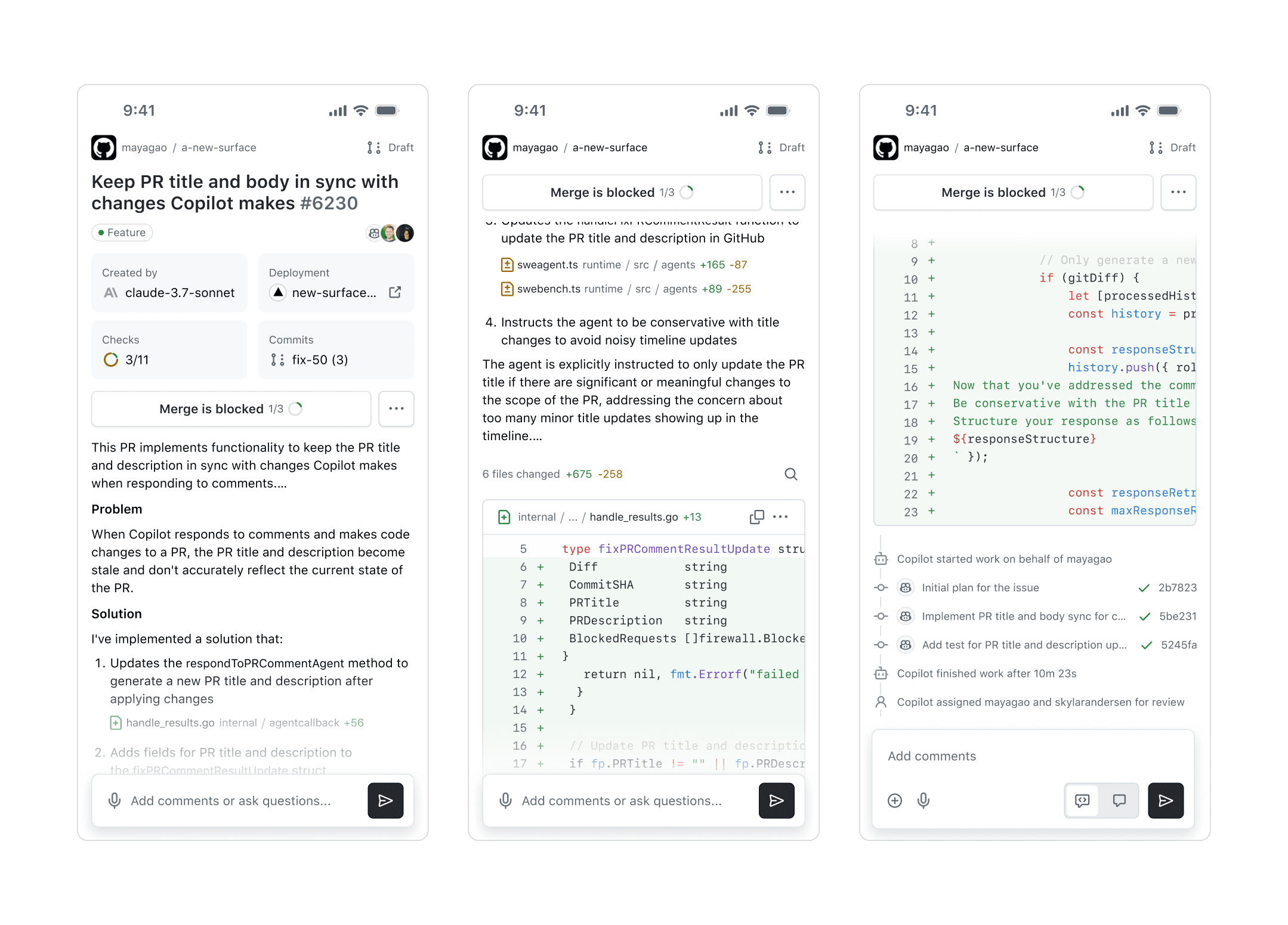

Reimagine a simplified version of code review on GitHub

Code review is evolving. As AI-generated code becomes more prevalent, reviewing it becomes harder: validation (checking functionality and catching edge cases) and understanding (evaluating deeper implications and compliance) are getting more complex, contextual, and individualized.

So how do we build reflective¹, not just reactive, review tools? After spending the past month exploring this space, I have a few thoughts:

Foundation still matters

Clarity of structure is more important than ever. Any lack of focus or clear hierarchy will only be amplified by more complex, automated tasks. But this also presents an opportunity: we can, and should, create unified², efficient interfaces that make both automated and manual work easier to navigate.

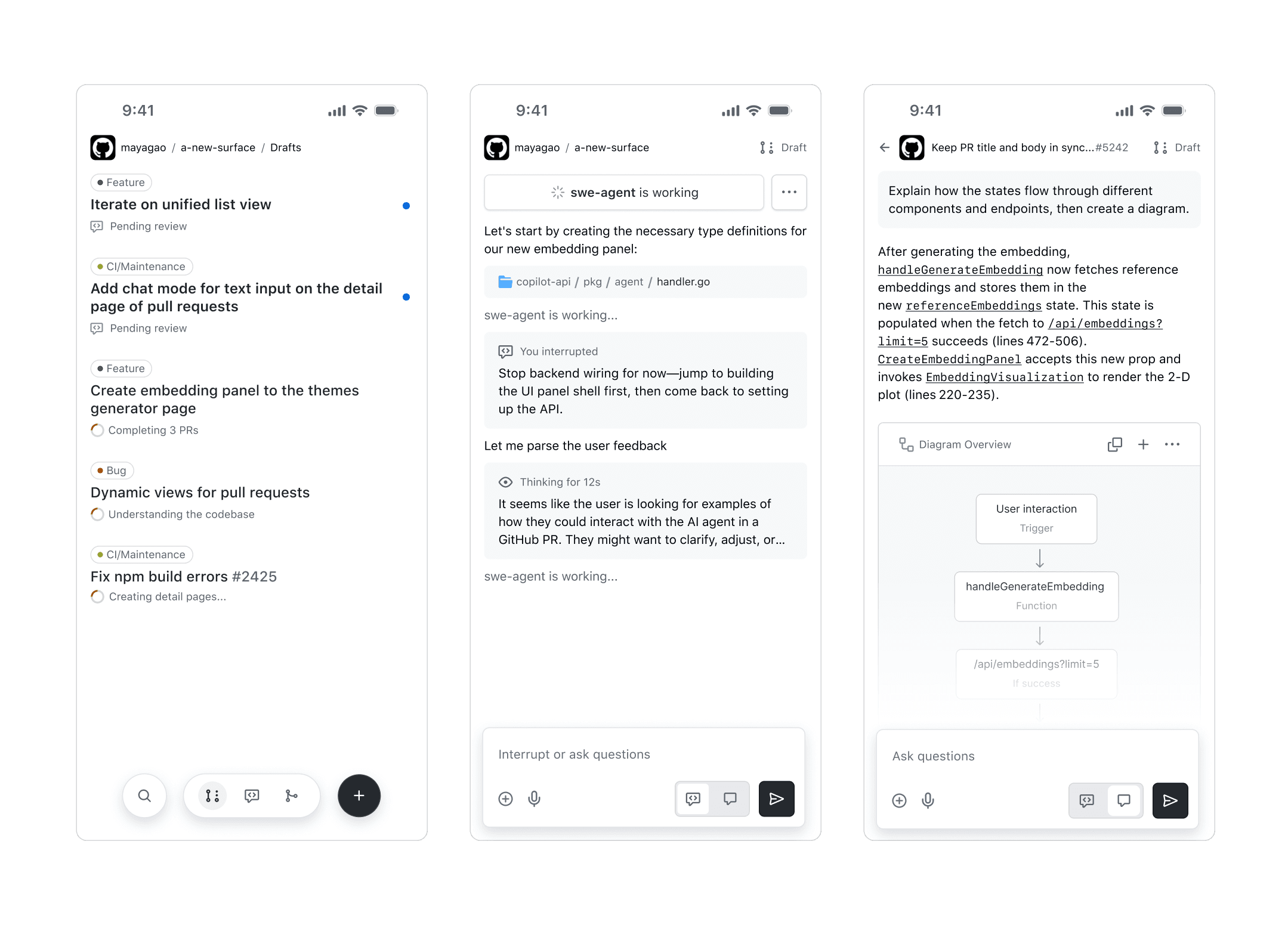

The fluid, dynamic nature

The boundary between generating and understanding is dissolving. People might need to frequently switch between asking questions, steering actions, and defining or managing tasks. Should tools specialize by task? Or converge into a single, adaptable surface? Likely somewhere in between.